Digital Services Act (DSA) overview

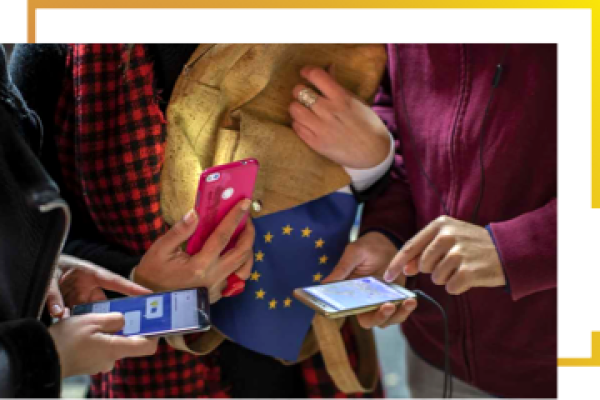

The DSA regulates online intermediaries and platforms such as marketplaces, social networks, content-sharing platforms, app stores, and online travel and accommodation platforms. Its main goal is to prevent illegal and harmful activities online and the spread of disinformation. It ensures user safety, protects fundamental rights, and creates a fair and open online platform environment.

What are the key goals of the Digital Services Act?

The DSA protects consumers and their fundamental rights online by setting clear and proportionate rules. It fosters innovation, growth and competitiveness, and facilitates the scaling up of smaller platforms, SMEs and start-ups. The roles of users, platforms, and public authorities are rebalanced according to European values, placing citizens at the centre.

For citizens

- better protection of fundamental rights

- more control and choice, and easier reporting of illegal content

- stronger protection of children online, such as the prohibition of targeted advertisement to minors

- less exposure to illegal content

- more transparency over content moderation decisions with the DSA Transparency Database

For providers of digital services

- legal certainty

- a single set of rules across the EU

- easier to start up and scale up in Europe

For business users of digital services

- access to EU-wide markets through platforms

- a level playing field against providers of illegal content

For society at large

- greater democratic control and oversight over systemic platforms

- mitigation of systemic risks, such as manipulation or disinformation

Which providers are covered?

The DSA includes rules for online intermediary services, which millions of Europeans use every day. The obligations of different online players match their role, size and impact in the online ecosystem.

Very large online platforms and search engines pose particular risks in the dissemination of illegal content and societal harms. Specific rules apply to platforms reaching more than 10% of the 450 million consumers in Europe, that is, more than 45 million users.

- Online platforms that bring together sellers and consumers, such as online marketplaces, app stores, collaborative economy platforms and social media platforms.

- Hosting services such as cloud and web hosting services (also including online platforms).

- Intermediary services offering network infrastructure: Internet access providers and domain name registrars (also including hosting services).

All online intermediaries offering their services in the single market, whether they are established in the EU or outside, have to comply with the new rules. Micro and small companies have obligations proportionate to their ability and size, while remaining accountable. In addition, micro and small companies that grow significantly benefit from a targeted exemption from a set of obligations during a transitional 12-month period.

Digital Services Act entering into force

As of 17 February 2024, the DSA rules apply to all platforms. Since the end of August 2023, these rules have already applied to designated platforms with more than 45 million users in the EU (10% of the EU’s population), the so-called very large online platforms (VLOPs) and very large online search engines (VLOSEs).
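As a rough illustration of the arithmetic behind the 45 million figure, the sketch below derives the threshold from the population number quoted in this overview. The function names and the `monthly_users` parameter are hypothetical, and the comparison follows the "more than" wording used here; it is not the legal designation test, which is carried out by the Commission.

```python
# Illustrative arithmetic only; names and parameters are assumptions,
# not taken from the DSA or any official tool.
EU_CONSUMERS = 450_000_000   # population figure quoted in this overview
THRESHOLD_PERCENT = 10       # share quoted in this overview


def size_threshold(population: int = EU_CONSUMERS,
                   percent: int = THRESHOLD_PERCENT) -> int:
    """Return the user count corresponding to the given share of the population."""
    return population * percent // 100


def exceeds_size_threshold(monthly_users: int) -> bool:
    """True if the user count is above the threshold ("more than", as worded here)."""
    return monthly_users > size_threshold()


if __name__ == "__main__":
    print(size_threshold())                     # 45000000
    print(exceeds_size_threshold(50_000_000))   # True
    print(exceeds_size_threshold(30_000_000))   # False
```

Integer arithmetic is used so the result is an exact user count rather than a floating-point approximation.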

The Commission enforces the DSA together with national authorities, which supervise the compliance of the platforms established in their territory. The Commission is primarily responsible for monitoring and enforcing the additional obligations that apply to VLOPs and VLOSEs, such as the measures to mitigate systemic risks.